Context Rot: Why AI Gets Worse the More You Use It (And How to Fix It)

By ModelCouncil Team

There's something the AI companies don't advertise: the more you use their tools, the worse they get.

If you've used ChatGPT or Claude for any serious project, you've felt it. The first few responses are sharp. By message 30, the model is repeating itself. By message 50, it's contradicting things it said earlier. You start a new chat and re-explain everything from scratch.

For casual use, that's annoying. For business decisions, it's dangerous.

The Science Behind the Problem

In December 2025, researchers from MIT published a paper called "Recursive Language Models" that takes on a problem practitioners already knew well: context rot.

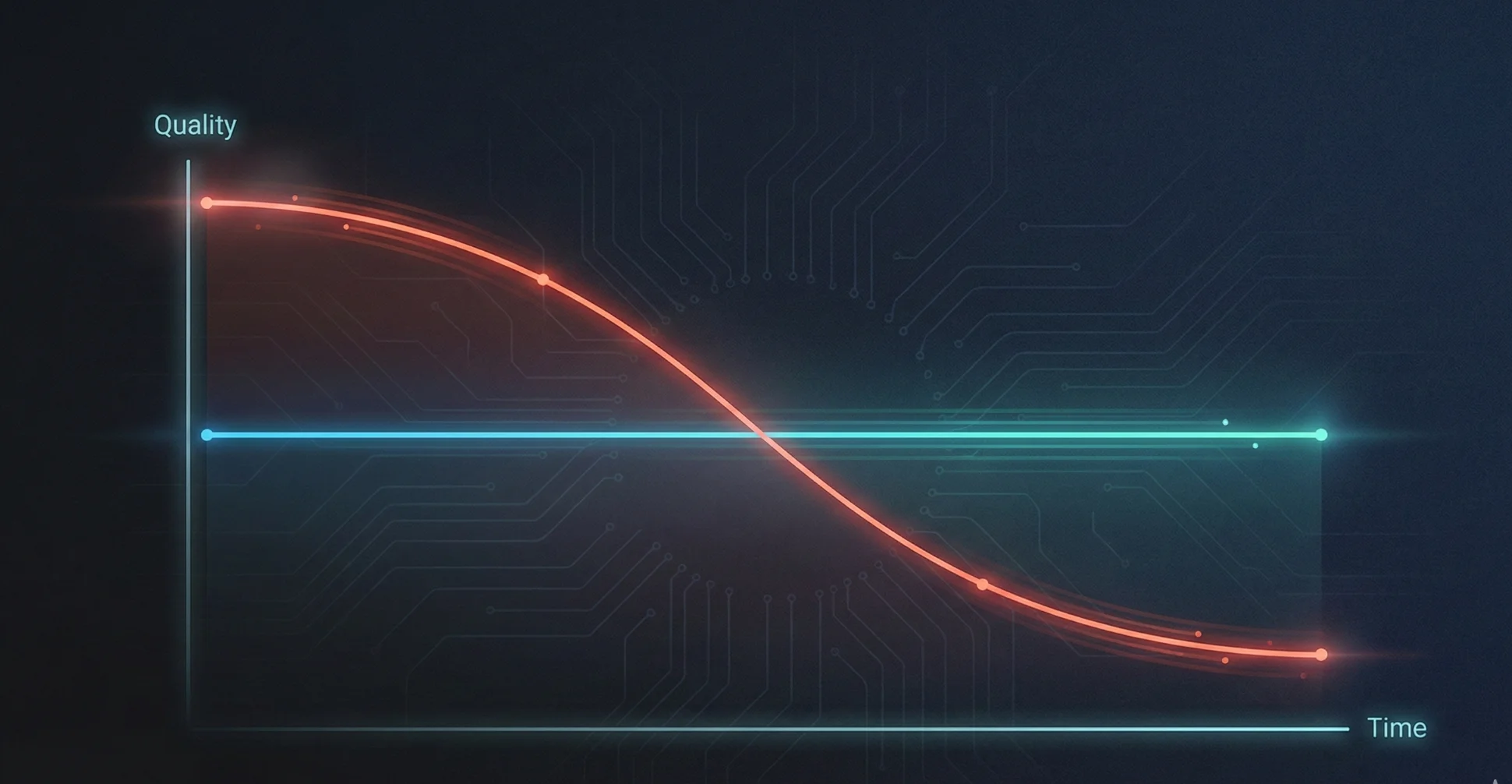

Context rot is the documented phenomenon where LLM quality systematically degrades as the context window fills up. The more history you include, the more the model struggles to find relevant information, maintain consistency, and produce accurate outputs.

The kicker? This affects even frontier models. The MIT team showed that GPT-5, among the most capable models available, exhibits significant quality degradation as prompts get longer. It's not a bug in older models. It's a fundamental limitation of how these systems work.

Why This Matters for Business

When you're using AI for important decisions, context rot creates a perverse dynamic:

The more context you provide, the worse the output.

Think about what that means for a real project:

- You upload your strategy documents, competitive analysis, and board deck

- You have a productive first session exploring options

- You come back the next day with follow-up questions

- The model has "forgotten" key constraints you established

- Its recommendations contradict yesterday's conclusions

- You start over, re-uploading everything, re-establishing context

Sound familiar?

This is why executives end up with dozens of orphaned ChatGPT threads. Each one started fresh because the previous one degraded. All that context—all those decisions—lost.

The Approaches That Don't Work

Bigger context windows don't solve this. Google's Gemini can handle a million tokens, but the MIT research shows model quality degrading well before a model reaches its context limit. Having a bigger bucket doesn't help if the water still goes stale.

Summarization loses information. Some tools try to compress your conversation history into summaries. But summaries are lossy by definition. The nuance that mattered for decision #12 gets flattened out by the time you reach decision #30.

Starting fresh loses continuity. The obvious workaround—just start a new chat—means you lose all accumulated context. You're back to explaining your situation from scratch, every time.

A Different Architecture

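The MIT paper proposes an interesting solution: instead of stuffing everything into the context window, let the model programmatically query its context. In the paper's setup, the long prompt is stored as a variable in a Python REPL, and the model writes code to inspect it and recursively calls itself on the pieces it needs. Treat history as a database to search, not a document to read.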
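To make "query, don't read" concrete, here is a much-simplified Python sketch. It is not the paper's implementation: in the paper the model writes its own inspection code and can call itself recursively, whereas here a single regex search is hard-coded and there is one model call. `query_history` and the `llm` callable are hypothetical stand-ins for a real model API.

```python
import re
from typing import Callable, List

# Hypothetical model interface: takes a prompt string, returns a completion.
LLM = Callable[[str], str]


def query_history(history: List[str], pattern: str, question: str, llm: LLM) -> str:
    """Keep the full history outside the prompt and send the model only the
    lines a programmatic search selects (simplified: no recursion)."""
    regex = re.compile(pattern, re.IGNORECASE)
    snippets = [line for line in history if regex.search(line)]
    prompt = question + "\n\nRelevant history:\n" + "\n".join(snippets)
    return llm(prompt)


if __name__ == "__main__":
    history = [
        "Decided: marketing budget capped for next year.",
        "Discussed office layout options.",
        "Constraint: budget review happens in Q3.",
    ]
    # An echo stub shows exactly what the model would receive.
    print(query_history(history, r"budget", "Can we fund a new team?", lambda p: p))
```

The echo stub makes the point visible: the office-layout line never reaches the prompt, however long the history grows.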

We built ModelCouncil with this insight in mind.

Instead of growing context, we manage it.

Here's how:

Decision Compression: Every Decision Board gets compressed to its structured essence: the recommendation, key reasons, consensus, and primary risk. Unlike free-form summarization, this is structured extraction, so those specific fields are kept explicitly, in roughly 200 tokens.
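A minimal Python sketch of what a compressed decision record could look like, assuming the four fields named above. The class name, field names, and the 4-characters-per-token estimate are illustrative assumptions, not ModelCouncil's actual schema; a real system would count tokens with the model's own tokenizer.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DecisionRecord:
    """Hypothetical compressed form of one Decision Board."""
    recommendation: str
    key_reasons: List[str]
    consensus: str
    primary_risk: str

    def to_context(self) -> str:
        # Fixed, labeled layout so every decision renders compactly and predictably.
        return (
            f"Recommendation: {self.recommendation}\n"
            f"Reasons: {'; '.join(self.key_reasons)}\n"
            f"Consensus: {self.consensus}\n"
            f"Primary risk: {self.primary_risk}"
        )


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    return max(1, len(text) // 4)


if __name__ == "__main__":
    record = DecisionRecord(
        recommendation="Pilot the product in one region before a full launch",
        key_reasons=["limits upfront spend", "tests demand early"],
        consensus="Most models agreed",
        primary_risk="Pilot region may not represent other markets",
    )
    text = record.to_context()
    print(text)
    print("approx tokens:", estimate_tokens(text))
```

Because the layout is fixed, each record costs a predictable number of tokens, which is what makes a fixed overall budget feasible.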

Intelligent Retrieval: Your documents get chunked and embedded. When you ask a new question, we retrieve only the relevant chunks—not everything. Your project can have 50 documents; we surface the 3 paragraphs that matter.
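Here is a toy Python version of chunk-and-retrieve. To keep it self-contained, the "embedding" is a bag-of-words term count scored with cosine similarity, a deliberate stand-in for a real embedding model. `chunk_paragraphs`, `embed`, and `retrieve` are hypothetical names, and splitting on blank lines is just one chunking choice.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_paragraphs(document: str) -> List[str]:
    # Split on blank lines; real systems often use token windows with overlap.
    return [p.strip() for p in document.split("\n\n") if p.strip()]


def embed(text: str) -> Counter:
    # Stand-in "embedding": lowercase word counts. Swap in an embedding model in practice.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(documents: List[str], question: str, k: int = 3) -> List[str]:
    """Rank every chunk of every document against the question; return the top k."""
    chunks = [c for doc in documents for c in chunk_paragraphs(doc)]
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(embed(c), q), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    docs = [
        "Pricing: we charge per seat.\n\nHiring: two engineers in Q2.",
        "Competitors price per usage.\n\nOffice move planned.",
    ]
    print(retrieve(docs, "How do competitors price?", k=1))
```

Only the top `k` chunks go into the prompt, so the prompt size depends on `k`, not on how many documents the project holds.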

Thread Architecture: Related decisions live in threads. When you continue a thread, we include compressed history. When you branch to explore an alternative, we track lineage but start fresh context.
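One way to model continue-versus-branch, sketched in Python under our own assumptions: each thread holds its compressed decisions plus a pointer to its parent, so a branch records where it came from without inheriting the parent's history. The `Thread` class and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Thread:
    """Hypothetical thread: compressed decisions plus a link to the parent thread."""
    name: str
    parent: Optional["Thread"] = None
    decisions: List[str] = field(default_factory=list)  # compressed decision texts

    def continue_with(self, compressed_decision: str) -> None:
        # Continuing a thread appends to its own compressed history.
        self.decisions.append(compressed_decision)

    def branch(self, name: str) -> "Thread":
        # Branching records lineage but starts with an empty history.
        return Thread(name=name, parent=self)

    def lineage(self) -> List[str]:
        # Walk parent links back to the root, then return root-first order.
        names, node = [], self
        while node is not None:
            names.append(node.name)
            node = node.parent
        return list(reversed(names))

    def context(self) -> str:
        # Only this thread's compressed history goes into the prompt.
        return "\n\n".join(self.decisions)


if __name__ == "__main__":
    main = Thread("pricing")
    main.continue_with("Decision A")
    main.continue_with("Decision B")
    alt = main.branch("usage-pricing")
    print(alt.lineage(), repr(alt.context()))
```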

The result: your context stays around 20,000 tokens regardless of whether you're on decision 1 or decision 50. Quality stays constant.
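The arithmetic behind a fixed budget is simple: at roughly 200 tokens per compressed decision, 50 decisions come to about 50 × 200 = 10,000 tokens, leaving the rest of a 20,000-token budget for instructions and retrieved chunks. The Python sketch below is a hypothetical assembler, not ModelCouncil's code. It fills such a budget newest-decisions-first and skips anything that would overflow, using a rough 4-characters-per-token heuristic.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token; use the model's tokenizer in practice.
    return max(1, len(text) // 4)


def assemble_context(
    system: str, decisions: List[str], chunks: List[str], budget: int = 20_000
) -> str:
    """Fill a fixed token budget: system text, then newest decisions, then
    retrieved chunks. Separators between parts are not counted."""
    parts = [system]
    used = estimate_tokens(system)
    # Newest decisions first, so the most recent work survives when the budget is tight.
    for text in list(reversed(decisions)) + chunks:
        cost = estimate_tokens(text)
        if used + cost > budget:
            continue  # skip items that would overflow; smaller later items may still fit
        parts.append(text)
        used += cost
    return "\n\n".join(parts)


if __name__ == "__main__":
    decisions = ["d" * 800] * 50  # 50 decisions at ~200 tokens each
    chunks = ["c" * 4000] * 5     # 5 retrieved chunks at ~1,000 tokens each
    ctx = assemble_context("s" * 400, decisions, chunks)
    print("approx tokens:", estimate_tokens(ctx))
```

Prioritizing recent decisions is one design choice; a system could instead reserve a fixed share of the budget for retrieved chunks so they are never crowded out.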

Query 50 = Query 1

This isn't just a performance optimization. It's a fundamental shift in how AI tools can work for business.

Traditional chat interfaces face an impossible tradeoff: include more history (and risk context rot) or start fresh (and lose continuity). You're always choosing between quality and context.

ModelCouncil breaks that tradeoff. We remember what you decided without degrading what we can do next.

Your 50th decision gets the same quality context as your first. That's not marketing—it's architecture.

The Parallel Evolution

Here's something interesting: we weren't the only ones thinking about multi-model approaches to improve AI reliability.

Around the same time we were building ModelCouncil, Andrej Karpathy—former Tesla AI Director, OpenAI founding team member—open-sourced a project called LLM Council. Same core insight: multiple models surface perspectives that any single model misses.

They went in different directions, though. His project has the models score and rank each other's answers, aimed at AI research and benchmarking. Ours synthesizes those perspectives into business decisions, with context that compounds.

The multi-model approach isn't just our idea. It's where serious AI practitioners are converging.

What This Means for You

If you're using AI for anything beyond casual questions, context rot is affecting your work. You might not have named it, but you've felt it.

The symptoms:

- Starting new chats because old ones "got weird"

- Re-explaining your situation repeatedly

- Inconsistent recommendations across sessions

- The model forgetting constraints you established earlier

The fix isn't a bigger context window or a better prompt. It's a different architecture—one that manages context intelligently instead of just accumulating it.

That's what we built.

*ModelCouncil gives you multiple AI perspectives on every decision, with context that compounds instead of decaying. Start your 14-day free trial →*